In today’s world of technology, IT infrastructure plays a critical role in keeping applications and services running smoothly. One concept that has gained significant popularity in recent years is clustered infrastructure. From improved reliability to easier scaling, clustered infrastructure offers a range of benefits that can improve the performance and efficiency of IT systems.

In this two-part blog series, we will explore clustered infrastructure and the fundamental aspects that make it an indispensable tool for businesses operating in the digital age.

Let’s first look at what IT infrastructure, and clustered infrastructure in particular, is. IT infrastructure provides the hardware, software, and network components needed to run and manage a project’s code. It can include servers, storage devices, networking equipment, virtualization software, and management tools. A clustered infrastructure groups several of these machines so that they work together as a single system. Because the specific technologies and components vary with the project’s requirements and the organization’s existing setup, we will cover only the basics here.

Section 1: Containers

A project usually runs in several environments, each with its own purpose. For example, a “Staging environment” is where hot-fixes are applied and tested before being deployed to the “Production environment”, and a separate environment may be reserved for “Load-Testing” so that those tests and their side effects stay isolated from other work. How can we ensure that all these environments run smoothly and use the same code and dependencies? The answer is “Containers”.

Just as the name suggests, a container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. In simpler words, containers are the solution to “It works on my machine”.
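To make the idea concrete, here is a minimal Python sketch of why a packaged image behaves the same everywhere: code and pinned dependencies are bundled into one immutable artifact identified by a hash of its contents, so staging and production can confirm they run exactly the same thing. The `Image` class, the `deploy` helper, and the package versions are hypothetical, and the hashing is a simplified stand-in for how real container images are content-addressed, not Docker’s actual format.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Image:
    """Hypothetical, simplified model of a container image."""
    app_code: str             # the application's code
    dependencies: tuple = ()  # pinned (name, version) pairs

    def digest(self) -> str:
        # Serialize the contents deterministically and hash them:
        # identical contents always produce an identical digest.
        payload = json.dumps(
            {"code": self.app_code, "deps": sorted(self.dependencies)},
            sort_keys=True,
        )
        return "sha256:" + hashlib.sha256(payload.encode()).hexdigest()


def deploy(environment: str, image: Image) -> dict:
    # An environment only records which image it runs; it adds no
    # dependencies of its own, so nothing can drift between environments.
    return {"env": environment, "image": image.digest()}


image = Image("print('hello')", (("flask", "3.0.0"), ("requests", "2.31.0")))
staging = deploy("staging", image)
production = deploy("production", image)
print(staging["image"] == production["image"])  # True

# Changing even one pinned version yields a different image.
patched = Image("print('hello')", (("flask", "3.0.1"), ("requests", "2.31.0")))
print(patched.digest() == image.digest())  # False
```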

Early on, organizations ran applications on physical servers. There was no way to define resource boundaries for applications on a physical server, and this caused resource allocation issues. For example, if multiple applications run on one physical server, one application can take up most of the resources, and as a result, the other applications underperform. One solution was to run each application on a different physical server. But this did not scale: resources were underutilized, and it was expensive for organizations to maintain many physical servers.

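Containers solve this problem because they are lightweight and share the host system’s kernel, so they avoid the overhead of running a separate operating system for each application. Each container can also be given its own CPU and memory limits (enforced on Linux by control groups, or cgroups), so one application cannot starve the others. As a result, multiple containers can run concurrently on a single host, making efficient use of its hardware.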
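The following Python sketch illustrates the resource-boundary idea under simplifying assumptions: each container declares a CPU and memory limit, and a host admits containers only while the sum of their limits fits within its capacity. The container names and sizes are hypothetical, and a real container runtime enforces limits in the kernel rather than through a check like this one.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    cpu_limit: float   # CPU cores the container may use at most
    mem_limit_mb: int  # memory ceiling in MiB


@dataclass
class Host:
    cpu_cores: float
    mem_mb: int
    containers: list = field(default_factory=list)

    def free_cpu(self) -> float:
        return self.cpu_cores - sum(c.cpu_limit for c in self.containers)

    def free_mem(self) -> int:
        return self.mem_mb - sum(c.mem_limit_mb for c in self.containers)

    def try_add(self, container: Container) -> bool:
        # Admit a container only if its limits fit in the remaining capacity,
        # so no container is promised resources already given to another.
        if container.cpu_limit <= self.free_cpu() and container.mem_limit_mb <= self.free_mem():
            self.containers.append(container)
            return True
        return False


host = Host(cpu_cores=4, mem_mb=8192)
for c in [Container("web", 1.0, 2048), Container("api", 1.5, 2048), Container("worker", 1.0, 2048)]:
    print(c.name, host.try_add(c))  # all three fit on one host
print(host.try_add(Container("batch", 1.0, 1024)))  # False: only 0.5 cores left
```

Three applications that would otherwise have needed three servers share one host, each inside its own boundary.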

Section 2: Tools for Containerization

Now that we know what a container is, how do we create, deploy, or manage one? Many tools are designed for exactly this purpose; we will go over two of the most popular.

Docker

Docker is an open-source platform that provides a lightweight, portable, and reliable way to package an application and its dependencies into a single container image. That image can run on any system with a compatible container runtime, provided it was built for the host’s CPU architecture (multi-architecture images can cover several).
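A Docker image is usually described by a Dockerfile. The Python sketch below simply renders a minimal Dockerfile for a hypothetical Python app so the packaging steps are visible in one place; the base image tag, the file names `requirements.txt` and `app.py`, and the helper `render_dockerfile` are assumptions for illustration, not a prescribed layout.

```python
def render_dockerfile(base_image: str = "python:3.12-slim",
                      entrypoint: str = "app.py") -> str:
    """Return a minimal Dockerfile for a hypothetical Python app."""
    lines = [
        f"FROM {base_image}",            # base image: OS userland plus Python runtime
        "WORKDIR /app",                  # working directory inside the image
        "COPY requirements.txt .",       # copy the dependency list first...
        "RUN pip install --no-cache-dir -r requirements.txt",  # ...so this layer can be reused from cache
        "COPY . .",                      # then copy the application code
        f'CMD ["python", "{entrypoint}"]',  # default command when a container starts
    ]
    return "\n".join(lines) + "\n"


print(render_dockerfile())
```

Saved as `Dockerfile` next to the code, it can be built with `docker build -t my-app .` and started with `docker run my-app`; `my-app` is a placeholder image name.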

Kubernetes (K8s)

Kubernetes, also referred to as K8s, is an open-source container orchestration system for automating the deployment, scaling, and management of containerized applications.
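At the heart of this automation is a control loop: you declare the desired state (for example, “three replicas of this app”), and Kubernetes controllers repeatedly compare it with the actual state and act to close the gap. The Python sketch below is a toy version of that idea, not Kubernetes code; the `reconcile` function and the pod naming scheme are hypothetical.

```python
import itertools

_pod_ids = itertools.count(1)  # hypothetical source of unique pod names


def reconcile(desired_replicas: int, running_pods: list) -> list:
    """One pass of a toy control loop: converge the running pods to the desired count."""
    pods = list(running_pods)
    while len(pods) < desired_replicas:  # too few: start new pods
        pods.append(f"web-{next(_pod_ids)}")
    while len(pods) > desired_replicas:  # too many: stop the extras
        pods.pop()
    return pods


pods = reconcile(3, [])    # initial deployment
pods.pop(0)                # simulate a pod crashing
pods = reconcile(3, pods)  # the loop notices and starts a replacement
print(len(pods))           # 3
pods = reconcile(5, pods)  # scale out by changing only the desired state
print(len(pods))           # 5
```

The operator never issues “start” or “stop” commands directly; changing the desired number is enough, and the loop does the rest.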

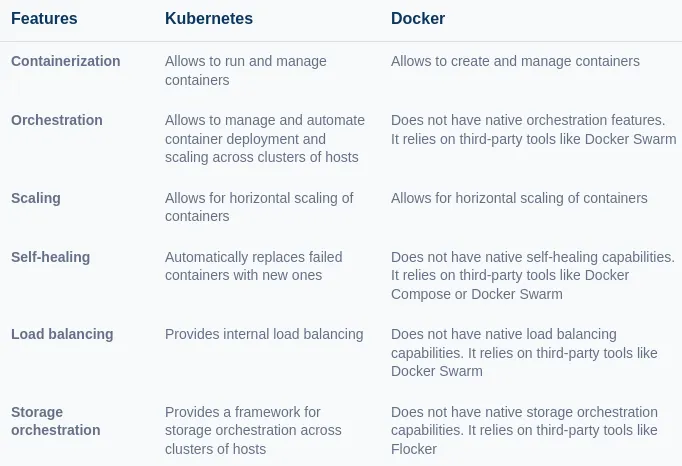

Docker vs Kubernetes

Time for a quick comparison between the two tools mentioned above.

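Docker builds and runs containers, but on its own it manages them on a single host; running containers across a cluster of machines requires an orchestrator, such as Docker’s built-in Swarm mode or Kubernetes. Since Kubernetes is designed to automate deployment and scaling across a cluster, we will focus on it and, with it, dive deeper into clustered infrastructure.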

Section 3: Clusters

A Kubernetes (K8s) cluster is a group of nodes that run containerized applications in an efficient, automated, distributed, and scalable manner. K8s clusters allow engineers to orchestrate and monitor containers across multiple physical, virtual, and cloud servers. This decouples the containers from the underlying hardware layer and enables agile and robust deployments.

Section 4: Cluster Key Components

Pods

Pods are the smallest deployable units in Kubernetes. A pod contains one or more containers that share the same network namespace and can share storage volumes. Pods are designed to be ephemeral and can be easily replaced or scaled up or down based on application demand.
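A rough Python model of these two properties: every container in a pod sees the same IP address (the shared network namespace), and a replacement pod is typically a brand-new object with a new name and IP rather than the old one restarted. The classes, pod names, and IP allocation scheme are hypothetical simplifications.

```python
import itertools
from dataclasses import dataclass, field

_ips = itertools.count(10)  # hypothetical IP allocator: 10.0.0.10, 10.0.0.11, ...


@dataclass
class Pod:
    name: str
    containers: list
    volumes: list = field(default_factory=list)
    ip: str = field(default_factory=lambda: f"10.0.0.{next(_ips)}")

    def address_of(self, container: str) -> str:
        # Containers in a pod share one network namespace, so they all have
        # the pod's IP (and can reach each other on localhost).
        if container not in self.containers:
            raise KeyError(container)
        return self.ip


def replace(pod: Pod, new_name: str) -> Pod:
    # Pods are ephemeral: a replacement is a new Pod built from the same spec,
    # with its own name and IP, not the old pod brought back to life.
    return Pod(new_name, list(pod.containers), list(pod.volumes))


web = Pod("web-1", ["app", "log-shipper"], ["shared-logs"])
print(web.address_of("app") == web.address_of("log-shipper"))  # True
web2 = replace(web, "web-2")
print(web2.ip != web.ip)  # True
```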

Workloads

The applications that Kubernetes runs are called workloads. A workload can be a single component or several discrete components working together. Within a K8s cluster, a workload is run across a group of pods.

Nodes

Nodes are the machines that supply the actual resources, such as CPU and RAM, that workloads run on. A node can be a virtual machine, an on-premises physical server, or a cloud instance; regardless of the underlying source, nodes are what represent compute resources in a K8s cluster.

Now, let’s define the relationship between pods, nodes, and workloads.

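Pods are an abstraction of executable code, while nodes are an abstraction of computer hardware. Together, nodes pool their individual resources into a cluster that behaves like one powerful machine. When an application is deployed onto a cluster, Kubernetes automatically schedules its pods onto individual nodes. If a node is removed or fails, the pods managed by a workload are recreated on the remaining nodes; newly added nodes become available for newly scheduled pods, although Kubernetes does not move already-running pods onto them by default.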
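To illustrate, here is a toy Python scheduler: each node advertises CPU and memory capacity, each pod requests some of both, and pods go to the node with the most free CPU that can still fit them. When a node is removed, its pods are placed again on the surviving nodes (in a real cluster, controllers create replacement pods and the scheduler places those). This is a deliberate simplification: the real kube-scheduler filters and scores nodes using many more criteria, and the node names, sizes, and helper functions here are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu: float     # allocatable CPU cores
    mem_mb: int    # allocatable memory in MiB
    pods: dict = field(default_factory=dict)  # pod name -> (cpu, mem_mb)

    def free(self) -> tuple:
        used_cpu = sum(c for c, _ in self.pods.values())
        used_mem = sum(m for _, m in self.pods.values())
        return self.cpu - used_cpu, self.mem_mb - used_mem


def schedule(pod: str, cpu: float, mem_mb: int, nodes: list) -> str:
    # Keep only nodes that can fit the request, then pick the one with the most free CPU.
    candidates = [n for n in nodes if n.free()[0] >= cpu and n.free()[1] >= mem_mb]
    if not candidates:
        raise RuntimeError(f"no node can fit {pod}")  # a real pod would stay Pending
    best = max(candidates, key=lambda n: n.free()[0])
    best.pods[pod] = (cpu, mem_mb)
    return best.name


def remove_node(name: str, nodes: list) -> list:
    # Drop the node, then place each of its pods on the remaining nodes.
    gone = next(n for n in nodes if n.name == name)
    remaining = [n for n in nodes if n.name != name]
    for pod, (cpu, mem) in gone.pods.items():
        schedule(pod, cpu, mem, remaining)
    return remaining


nodes = [Node("node-a", 4, 8192), Node("node-b", 4, 8192), Node("node-c", 2, 4096)]
for i in range(4):
    schedule(f"web-{i}", 1.0, 1024, nodes)
nodes = remove_node("node-a", nodes)
print({n.name: sorted(n.pods) for n in nodes})
# {'node-b': ['web-0', 'web-1', 'web-3'], 'node-c': ['web-2']}
```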

Section 5: Conclusion

So far we have covered the basic components of a clustered IT infrastructure. We explored how containers keep different environments consistent, introduced two popular tools (Docker for containerization and Kubernetes for orchestration), and looked at how Kubernetes clusters automate the management and scaling of containerized applications through key components such as pods, workloads, and nodes.

In the next part of this blog series, we will look further into the infrastructure and technologies involved, such as load balancing, auto-scaling, and high availability. Stay tuned…